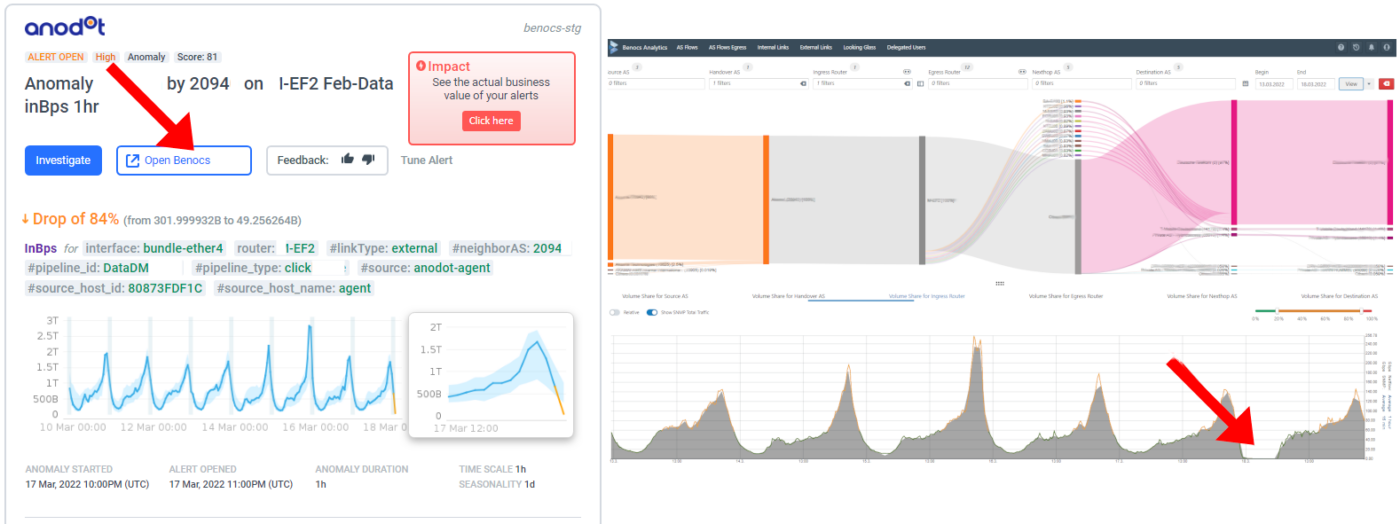

“We would like to test BENOCS Analytics tomorrow with our live data. Is that possible?”

That’s a question we’ve often heard from interested customers who want to see how our analytics performs on their own live network data rather than in a generic demo.

Until now, that wasn’t easy to deliver. Our proof-of-concept deployments used the full BENOCS architecture. While that ensured accurate results, it also meant setup times of six to eight weeks, with hardware procurement, exporter configuration, security configuration, and coordination between multiple teams. The outcome was reliable, but the wait slowed down evaluation and engagement.

Now, that’s changing. We’ve built a new Kubernetes-based trial environment that allows customers to test BENOCS Analytics with their own NetFlow, BGP, SNMP, and DNS data in a standardized environment within two weeks. Each trial runs in a dedicated namespace with its own set of BENOCS pods. Rest assured, each namespace is separated from all others, so your data stays entirely within your own test environment.

By using Kubernetes pods, BENOCS keeps the integrity of a full deployment at a smaller scale while considerably reducing the time it takes to see results in Analytics. It’s still your data, your network, and your insights, just available much faster through a smarter, automated setup.

Why we changed the way trials work

Over the past years, BENOCS Analytics has grown into a comprehensive visibility platform used by operators worldwide. But as our deployments matured, we noticed that the proof-of-concept stage became a bottleneck. Customers wanted to explore analytics capabilities sooner, while our team needed to maintain the quality and accuracy of a full deployment. We couldn’t compromise on either.

So, the question became:

“How do we make trials faster without compromising on accuracy?”

The answer came through containerization and automation. By moving the trial architecture into Kubernetes, we removed the dependency on manually provisioned systems, reduced complexity for the customer, and made trials far faster to set up.

How the new trial works

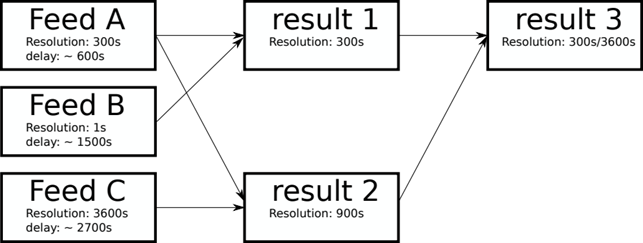

The customer connects their network to the trial environment via an encrypted IPsec tunnel. Through this tunnel, all of the following data can be exported directly over the Internet to the trial setup:

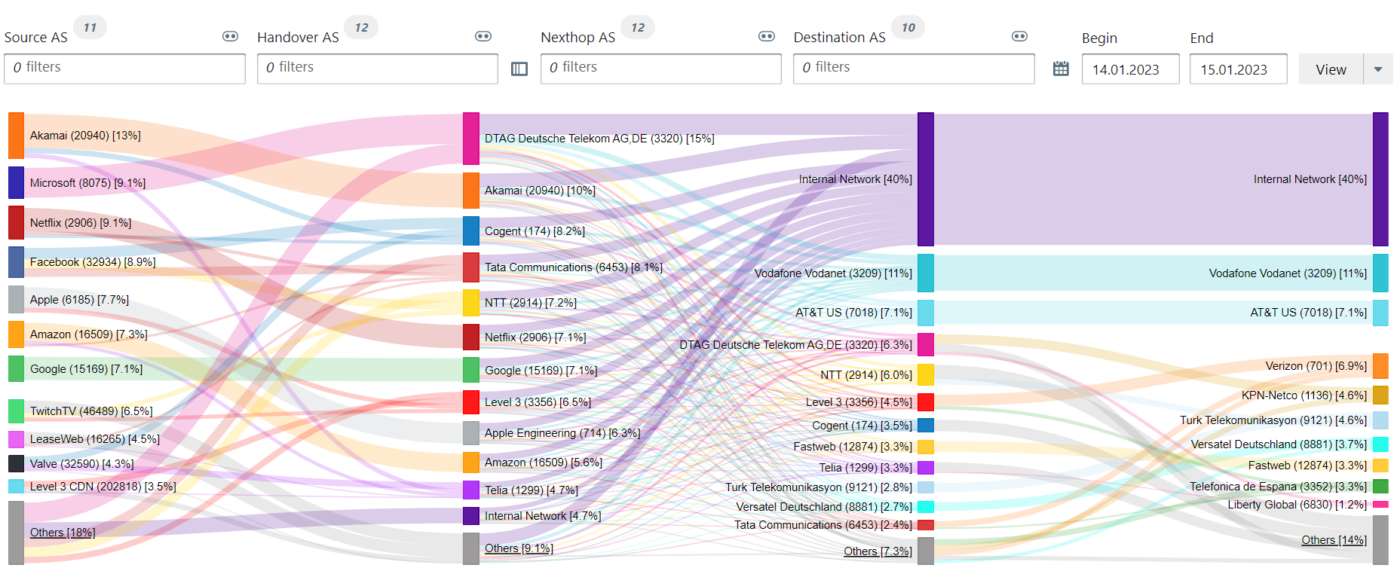

- Flow data (NetFlow, sFlow, IPFIX, etc.): sampled data-plane exports for traffic visibility

- BGP sessions for exporting the FIB (Forwarding Information Base); these also allow topology information to be collected via BGP-LS

- SNMP/Telemetry for device counters and configuration

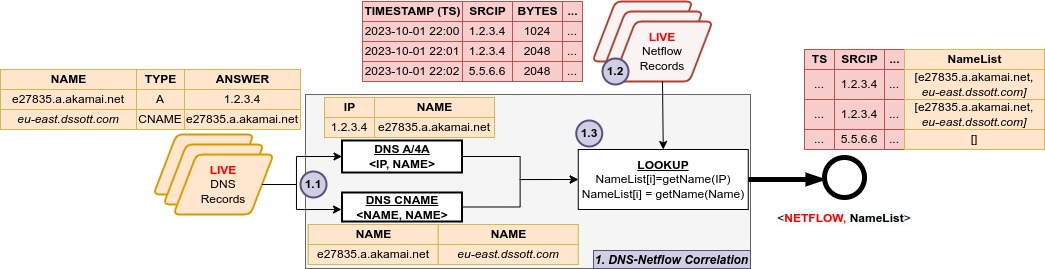

- DNS cache-miss data via dnstap, used for application tagging and identification

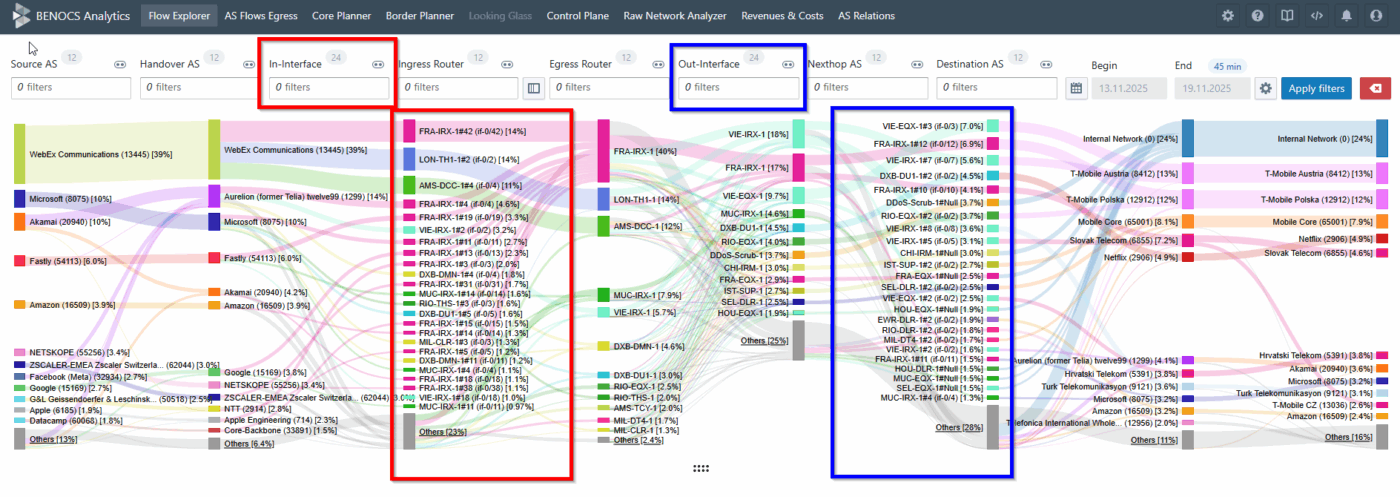

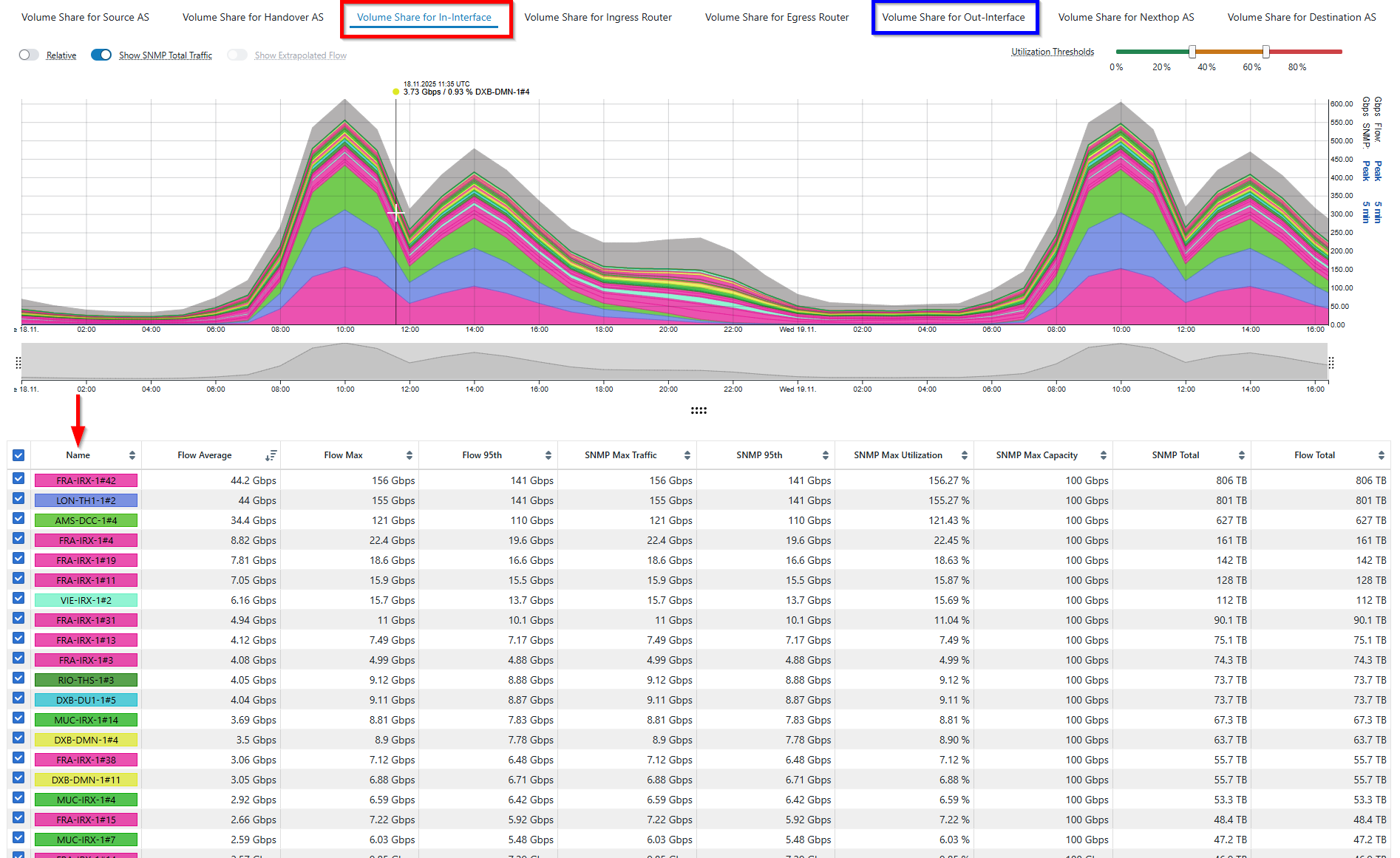

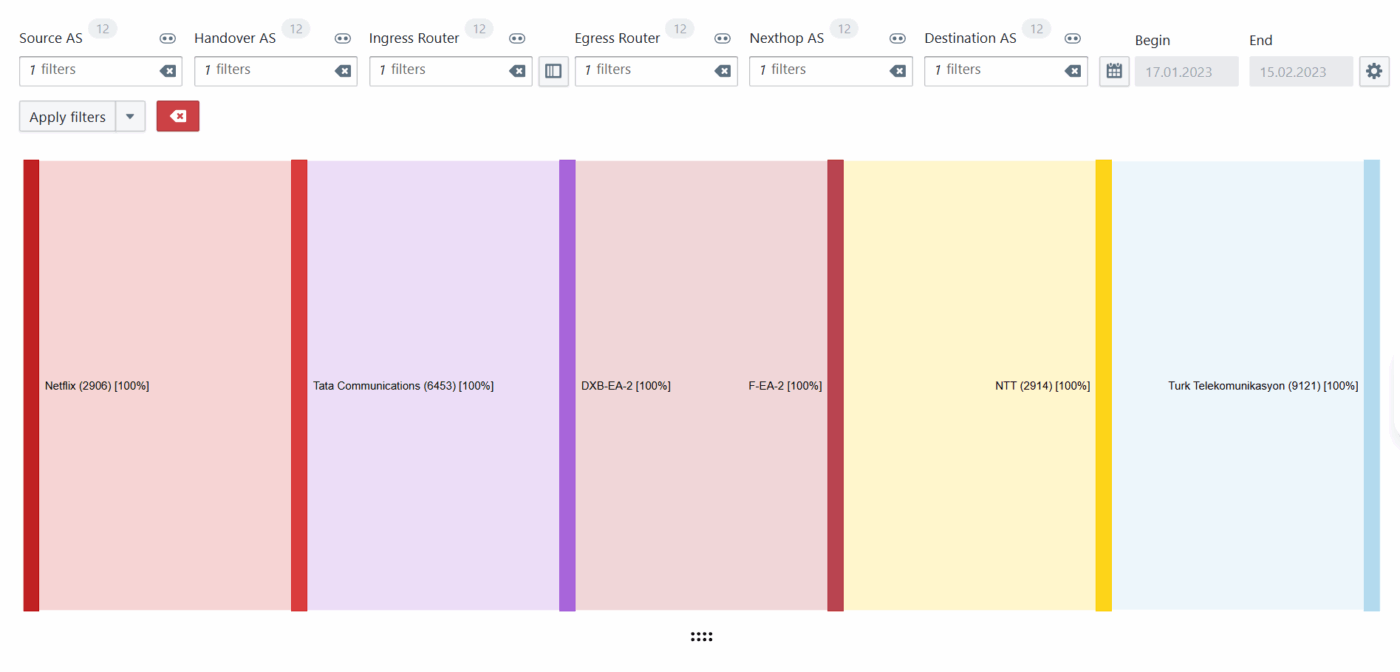

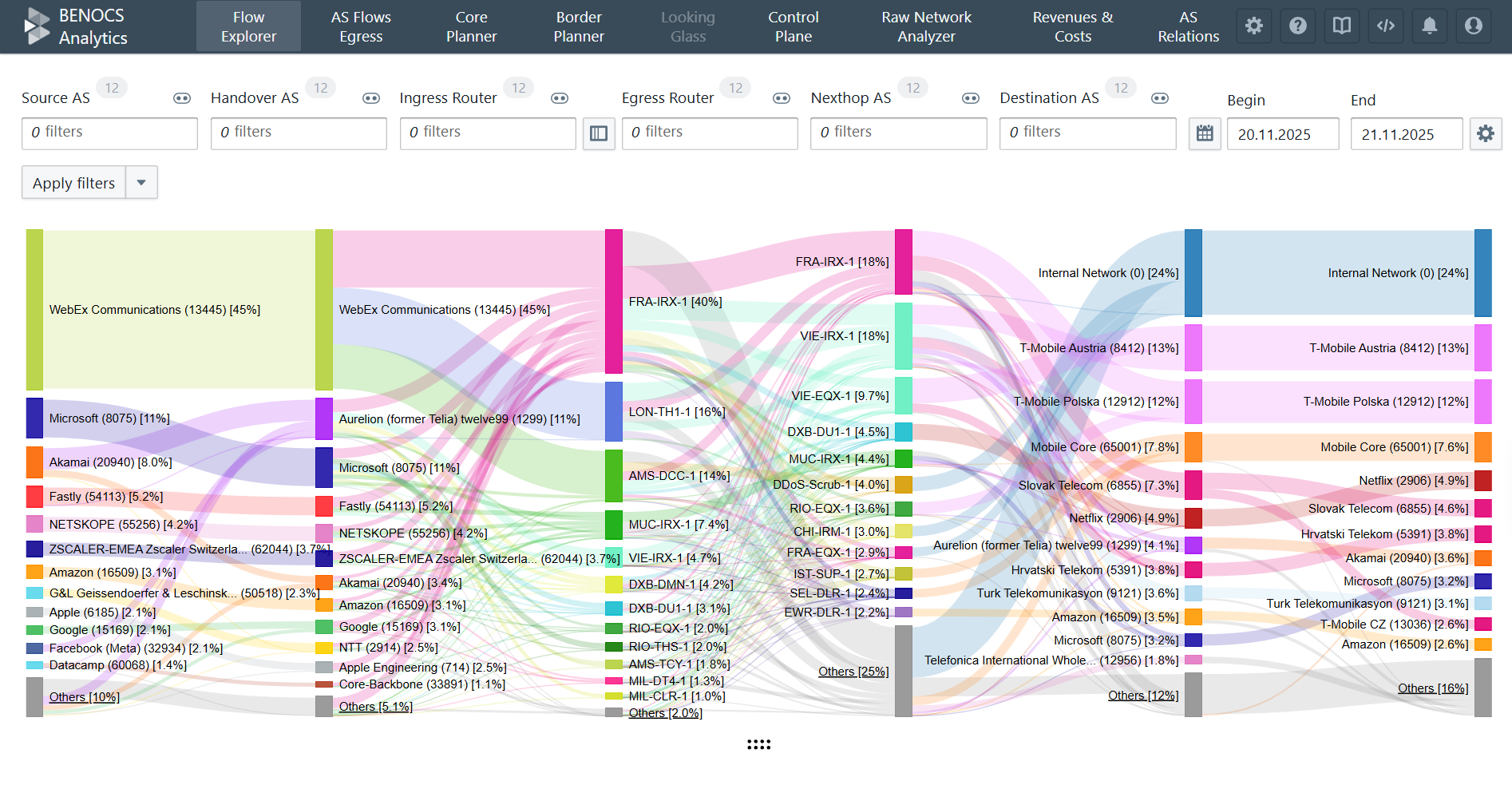

All incoming data flows into the same backend structure that powers BENOCS Analytics in any production environment. Within a few days of setting up the data feeds, most customers see live analytics in their environment.

The technical foundation: why Kubernetes

Kubernetes gives us the flexibility to create, move, update and decommission trial environments quickly while keeping them isolated and predictable. Each customer trial is effectively a self-contained version of BENOCS Analytics that runs on shared compute but with strict network and data separation.

- Automation: Kubernetes handles deployment, scaling, and health checks automatically.

- Consistency: Every trial uses the same container images, infrastructure code, and configuration templates, reducing variability.

- Resilience: Failures at any level can be mitigated quickly and automatically.

- Scalability: Whether extending trials or adding more of them, Kubernetes allows for a lot of flexibility.

- Efficiency: Shared resources mean lower overhead without sacrificing performance or security.

By combining containerization and infrastructure-as-code, we now spin up customer trials in days instead of weeks with no compromise on security or stability.
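As an illustration of the strict network and data separation described above, here is a minimal Python sketch of one common Kubernetes pattern: a dedicated Namespace per trial plus a NetworkPolicy that only admits ingress from pods in that same namespace. The `trial-` naming scheme, the `trial` label, and the policy name are hypothetical, and this is not BENOCS’s actual configuration. A real trial would also need rules admitting the customer’s tunnelled exports, and a NetworkPolicy only takes effect if the cluster’s network plugin enforces it.

```python
import json


def trial_manifests(trial: str) -> list[dict]:
    """Build hypothetical Namespace + NetworkPolicy manifests for one trial."""
    namespace = f"trial-{trial}"  # hypothetical naming scheme
    ns = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": namespace, "labels": {"trial": trial}},
    }
    policy = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "same-namespace-only", "namespace": namespace},
        "spec": {
            # An empty podSelector applies the policy to every pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # An empty podSelector under "from" matches only pods in the
            # policy's own namespace, so pods of other trials are not admitted.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }
    return [ns, policy]


if __name__ == "__main__":
    # Print as a v1 List; kubectl apply accepts JSON as well as YAML.
    doc = {"apiVersion": "v1", "kind": "List", "items": trial_manifests("example")}
    print(json.dumps(doc, indent=2))
```

Saved to a file, the printed output could be applied with `kubectl apply -f`. Generating manifests from a single function is one way to get the "same templates for every trial" consistency mentioned above.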

What customers need to provide

To start a trial, here’s what we require:

- A /29 IPv4 subnet from your network. These addresses are routed via the IPsec tunnel and assigned to pods, allowing direct access to the trial infrastructure from your network.

- Basic network infrastructure information, such as the loopback addresses and names of the routers included in the trial. Each router will need information from:

NOTE: The trial is limited to a selected set of routers. A full deployment no longer needs this information, as it picks up the entire network via IGP/BGP-LS.

- (Optional) Export of DNS cache misses as a dnstap stream
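For reference, a /29 contains 2^(32-29) = 8 IPv4 addresses. The minimal Python sketch below uses the standard-library `ipaddress` module to sanity-check a candidate prefix before sharing it. `describe_trial_subnet` is a hypothetical helper, and `192.0.2.0/29` is a documentation prefix (RFC 5737) standing in for your own; how many of the eight addresses the trial actually assigns to pods is not specified here.

```python
import ipaddress


def describe_trial_subnet(prefix: str) -> dict:
    """Hypothetical helper: check that a prefix is an IPv4 /29 and summarize it."""
    # strict=True raises ValueError if host bits are set (e.g. "192.0.2.3/29").
    net = ipaddress.ip_network(prefix, strict=True)
    if net.version != 4 or net.prefixlen != 29:
        raise ValueError(f"expected an IPv4 /29, got {net}")
    return {
        "network": str(net),
        "total_addresses": net.num_addresses,  # 2**(32 - 29) = 8
        # hosts() excludes the network and broadcast addresses, leaving 6.
        "classic_host_addresses": len(list(net.hosts())),
        "first": str(net[0]),
        "last": str(net[-1]),
    }


print(describe_trial_subnet("192.0.2.0/29"))
```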

Once these details are shared, our DevOps team uses automated infrastructure code to generate the customer configuration and deploy the stack. When the IPsec tunnel is up and enough data has arrived to draw statistical conclusions (usually around 48 hours), dashboards start populating automatically.

What we can support

Each trial is designed to balance performance and resource efficiency. As such, trials are limited to:

- Up to 6 edge routers

- Up to 50 external peerings

- Peak traffic, depending on the sampling rate:

  - 2 Tbps at 1:10,000

  - 200 Gbps at 1:1,000

  - 20 Gbps at 1:100

- Flow volume target under 2 MB/s (16 Mbit/s)

- 60 days of historical data

This ensures consistency across customers while keeping the experience close to production quality.
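The three peak-traffic figures above share one property: dividing each by its sampling rate gives the same 200 Mbit/s of sampled traffic (2 Tbit/s ÷ 10,000 = 200 Gbit/s ÷ 1,000 = 20 Gbit/s ÷ 100 = 200 Mbit/s). Likewise, the 2 MB/s flow-volume target equals 2 × 8 = 16 Mbit/s. The sketch below turns the published limits into a quick pre-check. The function and field names are hypothetical, and applying the 200 Mbit/s budget to sampling rates not listed above is an extrapolation, so treat the result as a rough guide rather than a guarantee.

```python
from dataclasses import dataclass

# Limits taken from the trial description above.
MAX_EDGE_ROUTERS = 6
MAX_EXTERNAL_PEERINGS = 50
# 2 Tbit/s at 1:10,000, 200 Gbit/s at 1:1,000 and 20 Gbit/s at 1:100 all
# correspond to 200 Mbit/s of sampled traffic. Applying this budget to other
# sampling rates is an assumption, not a published limit.
SAMPLED_BUDGET_BPS = 200_000_000


@dataclass
class TrialPlan:
    """Hypothetical description of a planned trial."""
    edge_routers: int
    external_peerings: int
    peak_traffic_bps: float
    sampling_rate: int  # N in "1:N"


def max_peak_traffic_bps(sampling_rate: int) -> int:
    """Peak traffic that keeps sampled traffic within the budget."""
    return SAMPLED_BUDGET_BPS * sampling_rate


def check_plan(plan: TrialPlan) -> list[str]:
    """Return the limits a plan would exceed (an empty list means it fits)."""
    problems = []
    if plan.edge_routers > MAX_EDGE_ROUTERS:
        problems.append(f"{plan.edge_routers} edge routers > {MAX_EDGE_ROUTERS}")
    if plan.external_peerings > MAX_EXTERNAL_PEERINGS:
        problems.append(f"{plan.external_peerings} peerings > {MAX_EXTERNAL_PEERINGS}")
    limit = max_peak_traffic_bps(plan.sampling_rate)
    if plan.peak_traffic_bps > limit:
        problems.append(
            f"peak {plan.peak_traffic_bps / 1e9:g} Gbit/s > "
            f"{limit / 1e9:g} Gbit/s at 1:{plan.sampling_rate}"
        )
    return problems


print(check_plan(TrialPlan(4, 30, 150e9, 1000)))  # []
print(check_plan(TrialPlan(8, 30, 400e9, 1000)))
```

The second call flags both the router count and the peak traffic, since 400 Gbit/s at 1:1,000 would double the sampled-traffic budget.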

The road ahead

The Kubernetes trial program is now ready for onboarding. Our goal is to make it effortless for you to experience BENOCS Analytics in a fast, secure, and reliable environment.

If your team has been curious about BENOCS but waiting for the right window to try it, that window just opened. Reach out to sales@benocs.com or any member of the BENOCS team to get started.